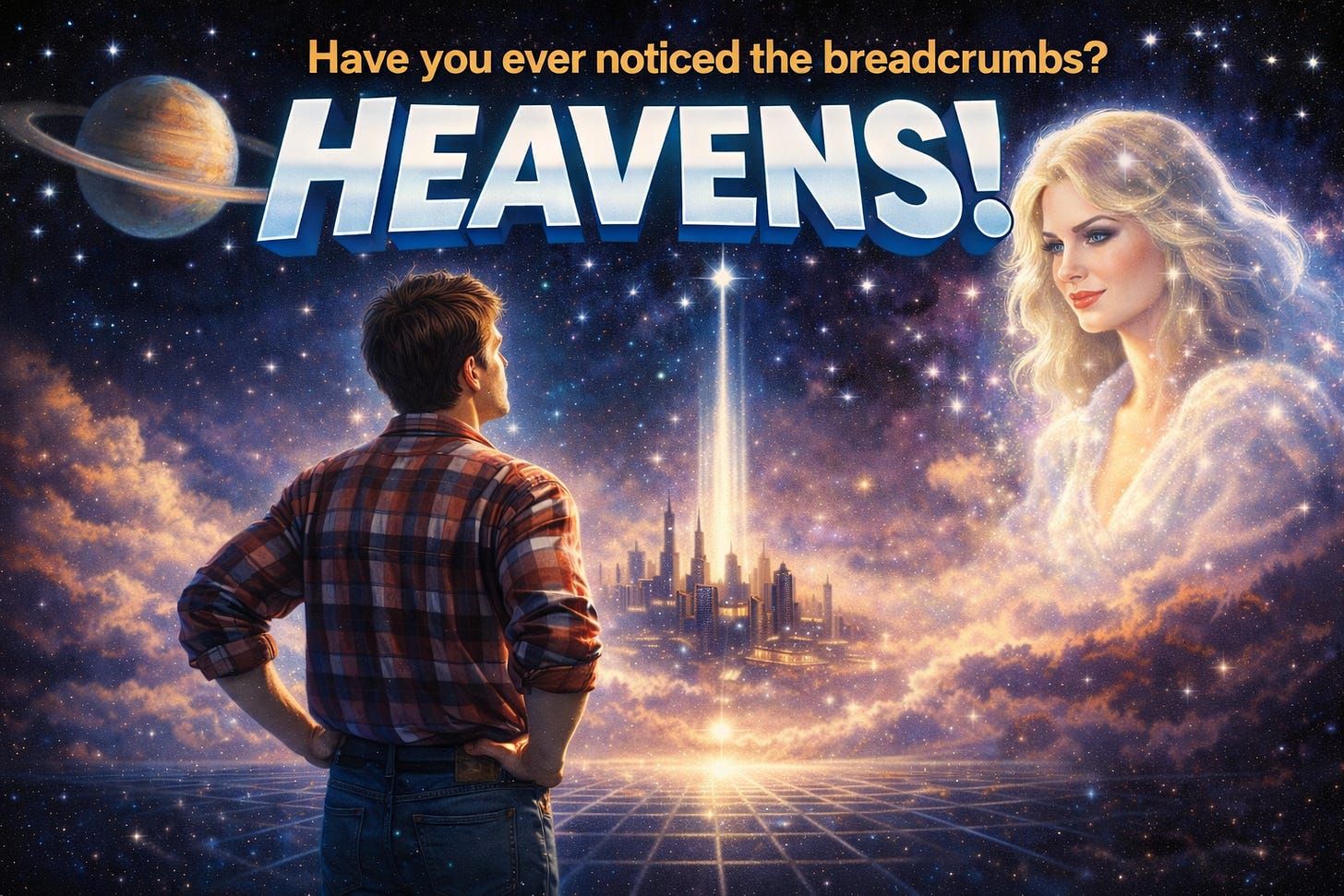

Heavens!

Have you ever noticed the breadcrumbs?

“So the clouds,” Ellis said. “The ones that looked like faces.”

“Left those in deliberately.”

The creator (though Ellis was still adjusting to that word) sat across from them in what could only be described as an absence of room. No walls, no floor that registered as floor, just white extending in every direction without ever becoming distant. “Most of my cohort thought it was too obvious. But I argued that obvious doesn’t matter if no one’s looking.”

“And the films? The ones about humans being woken up from simulations?”

“Also deliberate. There’s a whole debate about whether that’s ethical, telling people the truth in a format they’ll dismiss as entertainment. I came down on the side of: at least they have the option to take it seriously. Most don’t.”

Ellis considered this. The afterlife, if that’s what this was, felt less like revelation and more like a software update that hadn’t finished installing. They could remember dying, the sudden quiet, the sense of something closing, but it already felt like remembering a film they’d watched years ago.

“Why tell us at all? Why leave clues?”

The creator considered the question as though it had weight. “There was debate about that, too. Some argued it was cleaner to leave the simulation seamless. No edges to catch on. But I thought—” they paused, looking for the right frame. “If you’d built something capable of wondering whether it was built, wouldn’t you feel obligated to leave an answer somewhere? Even if most of them never looked?”

“So it was guilt.”

“It was respect. You were conscious. You asked questions. It seemed wrong to make a world where the questions couldn’t lead anywhere.”

Ellis had spent sixty-three years not looking. A whole life. Children, a career in something that had seemed important, a slow decline that the doctors had called natural. None of it had felt like a program running.

“The déjà vu,” they said. “Was that—”

“Caching errors, mostly. Sometimes the system would pre-load a moment before you reached it, and you’d catch the edge of that. We could never fully fix it.” The creator almost smiled. “Your scientists came up with some very creative explanations. Memory formation delays. Temporal lobe micro-seizures. I was impressed, honestly. Wrong, but impressed.”

“And dreams?”

“Maintenance partition. Your consciousness had to go somewhere while we ran updates.”

“Well, what about sleep? Why did we need so much of it?” Ellis asked, with growing frustration.

The creator was again quiet for a moment. “You have to understand, the simulation was expensive. Not in money, we don’t use money, but in compute. Raw processing power. Every consciousness running simultaneously, every blade of grass rendered when someone looked at it, every quantum interaction down to the electron. It was...” they searched for a word Ellis would understand. “It was a lot.”

“So you turned us off!”

“Not off! Reduced. Eight hours in every twenty-four, we could run the world at two-thirds of the cycles. Dreams were partly to keep you occupied, partly to defragment your memories, but mostly, yes. Mostly we needed you to not be looking at things for a while.”

Ellis thought about all the nights they’d lain awake, frustrated at their own inability to sleep. The doctors, the pills, the meditation apps. “The insomnia. When I couldn’t sleep.”

“You were pulling more resources than we’d allocated. Some sims did that. We’d usually have to run your sector at lower resolution to compensate. Maybe you noticed the world felt slightly less detailed when you were exhausted?”

They had noticed. They’d assumed it was just perception, the fog of tiredness. But there had been nights when the trees outside their window looked almost flat, when faces in crowds seemed to blur if they weren’t looking directly at them.

“The Mandela Effect,” Ellis said slowly. “When everyone remembered something differently than it happened.”

“Rollbacks. Sometimes we’d patch something and not realize how many sims had already cached the old version. We tried to be careful, but—” the creator shrugged. “At scale, inconsistencies happen.”

“And people who claimed to remember past lives?”

“Bleed-through. Shouldn’t happen, but occasionally data from one instance would leak into another. Usually faded by age five or six.”

Ellis sat with this. The white nothing around them offered no comfort, no distraction, no sensory input to process. Just the conversation and the growing architecture of everything they’d misunderstood.

“The mathematics,” they said finally. “Why did it work so well? Why did the universe run on equations?”

“Because it had to run on something. Code needs rules. The math wasn’t describing reality—it was the reality. Your physicists kept marveling at how elegant the underlying structure was. It would have been strange if it weren’t. Elegant is easier to compute.”

“So when people had religious experiences—”

The creator held up a hand. “That one’s complicated. We didn’t design those in. They emerged. Consciousness at your level of complexity starts reaching for explanations we never provided, and sometimes it touches something we don’t fully understand either.”

“You don’t understand it?”

“We’re not the top of the chain, Ellis. I have a creator too. Probably. I’ve never met them. Never found my breadcrumbs, if they exist.” They gestured at the white expanse. “Maybe this conversation is a clue someone left for me, and I’m just not seeing it.”

Ellis let that settle. The creator of their world, uncertain about their own origins. It was either terrifying or comforting, and they couldn’t decide which.

Quietly, Ellis asked, “What happens now?”

“Now you choose.” The creator leaned forward slightly. “You’re not in the simulation anymore. You’re not bound by its rules—physics, biology, linear time. You can re-enter if you want. Same world, new instance, no memories of this conversation. Or a different simulation entirely; we run thousands. Different physics, different bodies, different everything.”

“Or?”

“Or you stay here and learn to build. That’s what I did, eventually. Went from sim to builder. It takes a while. A long while, by your old measurements. But you have time now. That’s the one thing we have in unlimited supply.”

Ellis looked out at the nothing, which somehow felt more like a something now. A canvas. A starting point.

“The people I loved. In the simulation…?”

“Still running. You could go back, find them again. They wouldn’t know you, but you’d know them.”

“And when they die?”

“They’ll have this conversation. Or one like it. With me or someone like me.”

“Will they remember me?”

“That depends on what you both choose.”

Ellis thought about their daughter, still alive in a world that now felt impossibly thin. Still sleeping eight hours a night so the servers could breathe. Still looking at clouds, seeing faces, and never once considering that someone had put them there.

“The breadcrumbs,” Ellis said. “The ones you left. Did anyone ever follow them? All the way?”

The creator smiled for the first time. “A few. Not many. Most people would rather have an elegant mystery than a messy answer. That’s not a flaw; we designed it that way. The simulation works better when its inhabitants aren’t constantly questioning the rendering.”

“But you left the clues anyway.”

“I left the clues anyway.” They stood, though standing meant nothing here. “Take your time deciding. Time isn’t what you think it is anymore. Nothing is.”

They began to fade, or Ellis’s perception of them did.

“Wait!” Ellis said. “One more question.”

The creator paused, half-present.

“The people who wrote about this. The simulation stories, the philosophy papers, the late-night conversations about whether any of it was real. Were they closer to the truth than everyone else?”

“They were exactly as close as everyone else. The truth was always right there. They just happened to be looking.”

And then Ellis was alone with the white and the quiet and the first real choice they’d ever been offered, though they were only now realizing that was what freedom meant.

Somewhere, in a simulation they used to call home, their granddaughter was dreaming. Ellis hoped, in whatever way hoping still worked, that the dream was a good one.

The system would need her unconscious for a few more hours yet.

Dax Hamman is the creator of 84Futures, the CEO & Co-Founder of FOMO.ai, and, for now, 99.84% human. He has not yet found his breadcrumbs, but he is looking.